ESRF Highlights 2017: Enabling technologies

Modernising the ISPyB application with Docker and continuous integration

Docker containers and continuous integration are being used at the ESRF to modernise and upgrade the ISPyB web application, helping software development and IT teams work together more efficiently.

ISPyB (Information System for Protein crystallographY Beamlines) is an information management system for synchrotron macromolecular crystallography beamlines, developed as part of a joint project between the ESRF Joint Structural Biology Group (JSBG), BM14 (e-HTPX) and the EU-funded SPINE project. The ISPyB web application is written in Java, runs on a JBoss application server and supports both MySQL and Oracle databases.

Running since 2012, the project requires the input and collaboration of several ESRF teams, and updating the application can be a complicated and lengthy process. New features implemented by the software development team often require the IT team to install a new version of the server, which in turn means reinstalling the operating system, obtaining a spare machine in case a rollback is needed, planning the change with the network team, backing up the data, and so on. Unsurprisingly, the requirements of flexibility, stability, control and efficiency are often in conflict.

To resolve this, a set of practices known as DevOps has been adopted to automate the processes between the software development and IT teams, so that they can build, test and release software faster and more reliably. DevOps is founded on building a culture of collaboration between teams that have historically worked in silos. Its promised benefits include increased trust, faster software releases, the ability to solve critical issues quickly and better management of unplanned work.

The solution chosen to implement this was Docker containers, which change the way software is built and delivered. When an application is packaged into a Docker container, it is bundled with the parts of the operating system (OS) that it needs. The build itself is described in a Dockerfile: a text file that lists, step by step, how to install and configure the application, much like the installation documentation one would write for a real machine, except that Docker executes it. As a result, the container can be built with a few simple Docker commands and run anywhere, from a laptop to Amazon cloud services, the ESRF data centre or a beamline workstation, whatever Linux distribution the machine is running.
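To make the Dockerfile idea concrete, the following Python sketch writes out the text of a minimal Dockerfile that layers a Java web archive onto a pre-built application-server image, and lists the two Docker commands that build and start it. The base image name, the `ispyb.war` file and the deployment path are hypothetical placeholders, not the ESRF's actual configuration; `FROM`, `COPY` and `EXPOSE` are standard Dockerfile instructions, and `docker build -t` and `docker run -d -p` are standard Docker CLI commands.

```python
# Hypothetical values: the article does not give the real base image, file names or paths.
BASE_IMAGE = "example/jboss-as:7.1"                # hypothetical pre-built JBoss image
WAR_FILE = "ispyb.war"                             # hypothetical build artefact
DEPLOY_DIR = "/opt/jboss/standalone/deployments/"  # hypothetical deployment directory


def render_dockerfile(base_image: str, war_file: str, deploy_dir: str, port: int = 8080) -> str:
    """Return the text of a minimal Dockerfile that adds an application to a base image."""
    lines = [
        # Start from an image that already contains the OS parts and the JBoss server.
        f"FROM {base_image}",
        # Add the application on top; the application server deploys it at start-up.
        f"COPY {war_file} {deploy_dir}",
        # Document the port the server listens on.
        f"EXPOSE {port}",
        # No CMD line: assumes the (hypothetical) base image already defines how JBoss starts.
    ]
    return "\n".join(lines) + "\n"


def build_and_run_commands(tag: str, port: int = 8080) -> list:
    """Docker CLI commands that build the image from ./Dockerfile and run it in the background."""
    return [
        f"docker build -t {tag} .",
        f"docker run -d -p {port}:{port} {tag}",
    ]
```

Writing the returned text to a file named `Dockerfile` next to the WAR file and running the first command would build a tagged image; the second command starts it in the background with port 8080 published. The same two commands work unchanged on a laptop, a server in the data centre or a cloud machine, which is the portability the paragraph above describes.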

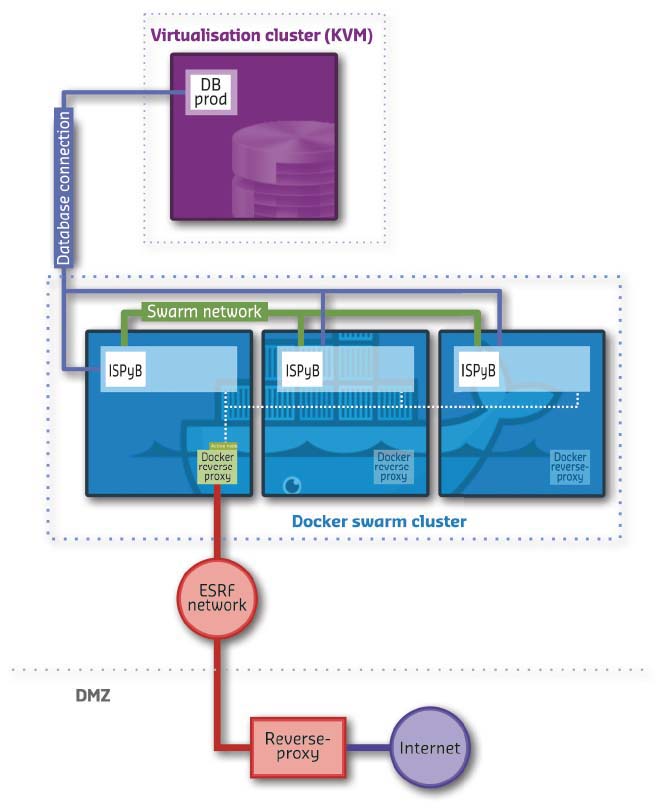

Fig. 155: Architecture diagram of the ISPyB application on the Docker swarm cluster.

Docker also offers a clear separation between the container (or application) and the data it needs. Container images can be versioned, so rolling back or testing a new version of the application takes a matter of seconds. Docker also provides a clustering mode, called swarm, which offers automatic failover and allows the application to be scaled up to handle more requests. This proved necessary for ISPyB because the JBoss server had stability issues: Docker now restarts the application without human intervention. Containers should also eliminate errors caused by libraries that are too old or too new to be compatible with the host system. In addition, Docker Hub provides a large number of pre-built images. For ISPyB, for instance, pre-built images with the right JBoss server version are used and customised to fit in-house needs (Figure 155).
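The versioning, failover and scaling behaviour described above can be illustrated with a small toy model in Python. It is not how Docker swarm is implemented: it only keeps a desired state (an image tag and a replica count) and a list of running tasks, and "reconciles" the two, which is the idea behind swarm's automatic restarts. The service name and image tags are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """Toy model of a swarm-style service (not Docker's actual implementation).

    It stores a versioned image tag, a desired replica count and the running
    tasks, to illustrate rollback, scaling and restart-on-failure.
    """
    name: str
    image: str
    replicas: int
    history: list = field(default_factory=list)  # previously deployed image tags
    running: list = field(default_factory=list)  # image tag of each running task

    def reconcile(self) -> int:
        """Bring the running tasks back to the desired state; return how many were started."""
        # Stop surplus tasks, e.g. after scaling down.
        del self.running[self.replicas:]
        missing = self.replicas - len(self.running)
        # Start a replacement for every missing task, without human intervention.
        self.running.extend([self.image] * missing)
        return missing

    def crash(self, index: int) -> None:
        """Simulate one task failing, e.g. an unstable application server."""
        self.running.pop(index)

    def update(self, new_image: str) -> None:
        # Remember the current tag so that a rollback is only a switch back to it.
        self.history.append(self.image)
        self.image = new_image
        self.running.clear()
        self.reconcile()

    def rollback(self) -> None:
        # Redeploy the previous tag: nothing is rebuilt or reinstalled.
        if not self.history:
            raise RuntimeError("no previous version to roll back to")
        self.image = self.history.pop()
        self.running.clear()
        self.reconcile()

    def scale(self, replicas: int) -> None:
        # Changing the desired count is enough; reconciliation does the rest.
        self.replicas = replicas
        self.reconcile()
```

For example, `Service("ispyb", "ispyb:1.1", replicas=2)` followed by `update("ispyb:1.2")`, a `crash(0)` and a `reconcile()` ends with two tasks running 1.2 again, and `rollback()` returns every task to 1.1. On a real swarm cluster the corresponding operations would be `docker service create --name ispyb --replicas 2 --restart-condition on-failure ispyb:1.1`, `docker service update --image ispyb:1.2 ispyb`, `docker service rollback ispyb` and `docker service scale ispyb=4`, with crashed tasks replaced automatically.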

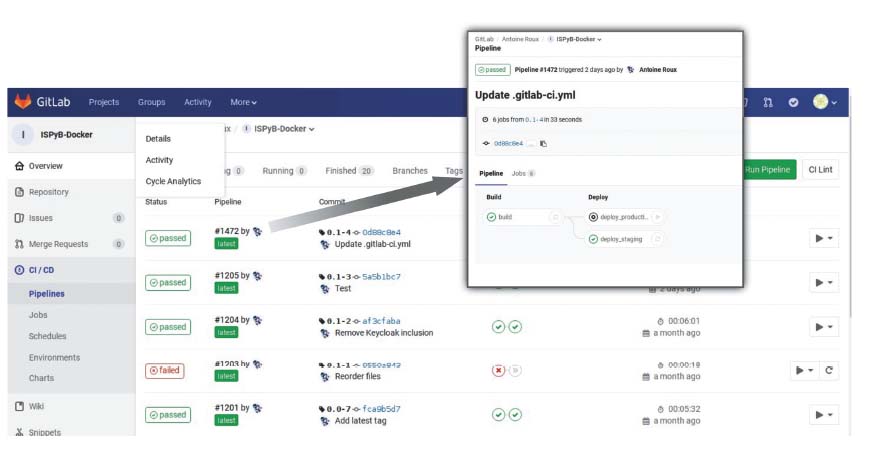

Fig. 156: GitLab web interface showing the update process for the application on the production infrastructure.

This initiative can be extended further with continuous integration. Continuous integration is the process of automating the compilation, linking and testing of code every time a team member commits changes to version control. For ISPyB, this means that a new container is built automatically each time code is committed. The software development team can also push the newly built container into production without intervention from the IT team (Figure 156). This is very useful because each team can focus on its main activity: the IT team maintains an infrastructure with high availability, scalability, monitoring, security and high performance, while the software development team concentrates on the code, delivers faster and keeps full, simple control over the release cycle.
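The commit-triggered flow can be sketched as a short Python model of a pipeline: build an image tagged with the commit hash, test it, push it to a registry and, only when a developer asks for it, update the running swarm service. The registry path, the `./run-tests.sh` test script and the service name are hypothetical; in an actual GitLab CI pipeline the registry path and commit hash would typically come from GitLab's predefined variables `CI_REGISTRY_IMAGE` and `CI_COMMIT_SHA`, and the deploy job would be marked `when: manual`.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, str]  # (stage name, shell command)

# Hypothetical: the article does not say how ISPyB's tests are run inside the image.
TEST_COMMAND = "./run-tests.sh"


def image_tag(registry_image: str, commit_sha: str) -> str:
    """Tag each image with the (shortened) commit it was built from, so every build is traceable."""
    return f"{registry_image}:{commit_sha[:8]}"


def pipeline(registry_image: str, commit_sha: str, service: str = "ispyb",
             deploy: bool = False) -> List[Stage]:
    """Stages triggered by one commit; deployment is only added when a developer requests it."""
    tag = image_tag(registry_image, commit_sha)
    stages = [
        ("build", f"docker build -t {tag} ."),
        ("test", f"docker run --rm {tag} {TEST_COMMAND}"),
        ("push", f"docker push {tag}"),
    ]
    if deploy:
        # Rolling update of the swarm service to the new tag, with no IT-team intervention.
        stages.append(("deploy", f"docker service update --image {tag} {service}"))
    return stages


def run_pipeline(stages: List[Stage], run: Callable[[str], bool]) -> List[str]:
    """Run stages in order and return the names of those that succeeded.

    The first failing stage stops the pipeline, so a broken build is never pushed or deployed.
    """
    completed = []
    for name, command in stages:
        if not run(command):
            break
        completed.append(name)
    return completed
```

Passing `run=lambda cmd: subprocess.run(cmd, shell=True).returncode == 0` would execute the commands for real; injecting the runner keeps the stage logic testable without a Docker daemon. Tagging by commit hash also means that any earlier build can be redeployed by tag, which is what makes the rollbacks described above quick.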

Docker containers and continuous integration have been successfully integrated into the workflow of the ISPyB team and now run smoothly. Of course, this comes at a cost: new tools had to be mastered and new working methods adopted, but the end result is that several teams now work together much more efficiently. Container technology is not ‘one tool to rule them all’, but it can be considered a disruptive technology that helps deliver software in a constantly evolving research infrastructure. Major companies such as OVH, Amazon and Google use these technologies widely today, and several initiatives have been launched to adapt container techniques to very specific uses, such as scientific software on compute clusters, suggesting that this is only the beginning for the technology.

Author

B. Rousselle, ESRF