Tomography data segmentation aided by machine learning

14-02-2022

Researchers from MIT have trained a deep learning system to identify damage induced in aerospace-grade carbon-fibre composites within a tomography dataset. The system agreed closely with manual segmentation and even identified regions in the tomogram that had been incorrectly classified by hand.

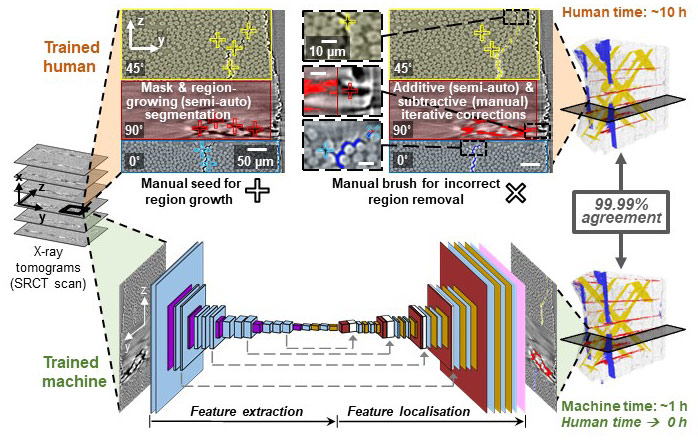

Following synchrotron radiation computed tomography (SRCT) data collection at the ESRF, users typically carry out 3D volume reconstruction using ESRF routines and then segment the data, i.e. identify regions of interest, in a highly labour-intensive manual or semi-automated way. In a publication released today, researchers at MIT describe how they trained a machine learning system, specifically a deep learning (DL) artificial intelligence (AI) model based on a convolutional neural network (CNN), to identify regions of damage induced in aerospace-grade carbon-fibre composites. Figure 1 presents an overview of their approach.

Figure 1. Overview of segmentation showing the methodology for manual identification of regions of interest and comparison with the trained CNN machine.

The X-ray computed tomography dataset was 4D, i.e. spatial and temporal data, recording damage progression within nanoengineered carbon-fibre composites as the load on them was increased. It was collected at beamline ID19 by the MIT group in collaboration with the University of Porto (Portugal) and the University of Southampton (UK). This experiment has already been described in an ESRF news item: Tomography provides quantitative measure of damage progression in carbon fibre composites under load.

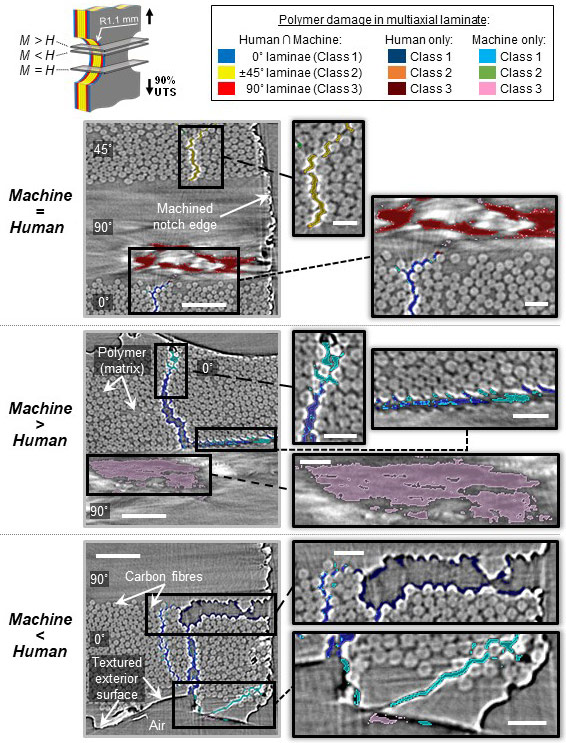

The CNN machine was trained using 65,000 human-segmented tomograms. Quantitative and qualitative analyses in 2D and 3D showed the machine segmentation results to be in excellent agreement (~99.99% class binary accuracies on validation and test datasets) with time-intensive, subjective, human-driven semi-automatic segmentation methods. At the same time, the machine brought significant improvements in efficiency and consistency, and in some cases improved upon the trained-human identification of damaged regions. Figure 2 presents examples of machine vs human segmentation, comparing their ability to classify damage.

Figure 2. Examples of machine vs. human segmentations where: (top) the machine performs similarly to the human, (middle) the machine outperforms the human, and (bottom) the machine underperforms the human. Scale bars, left 50 µm, right 20 µm.
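The "class binary accuracy" quoted above can be illustrated with a minimal sketch. It assumes the metric is computed one class at a time, as the fraction of voxels on which human and machine agree about whether a voxel belongs to that class; the exact definition used in the paper may differ. The function name, the tiny label lists and the class numbering (0, 1, 2) are hypothetical and chosen only for illustration. Plain Python lists stand in for the 3D label volumes a real workflow would use.

```python
def binary_accuracy_per_class(human, machine, classes):
    """Return {class: fraction of voxels where both labellings agree
    on the yes/no question 'does this voxel belong to the class?'}.

    `human` and `machine` are flat sequences of integer class labels,
    one entry per voxel (hypothetical stand-ins for segmented volumes).
    """
    if len(human) != len(machine):
        raise ValueError("label sequences must have the same length")
    if not human:
        raise ValueError("label sequences must not be empty")

    total = len(human)
    scores = {}
    for c in classes:
        # A voxel counts as agreement when both say "class c" or both say "not c".
        agree = sum((h == c) == (m == c) for h, m in zip(human, machine))
        scores[c] = agree / total
    return scores


# Hypothetical example: 8 voxels, 3 classes, one voxel labelled differently.
human_labels = [0, 0, 1, 1, 2, 0, 0, 2]
machine_labels = [0, 0, 1, 0, 2, 0, 0, 2]

scores = binary_accuracy_per_class(human_labels, machine_labels, classes=(0, 1, 2))
for cls, acc in scores.items():
    print(f"class {cls}: binary accuracy = {acc:.3f}")
```

In this toy case the single disagreeing voxel lowers the score for the two classes it involves and leaves the third untouched, which is why the metric is reported per class.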

Such a CNN machine has the potential to accelerate discovery within new datasets, and it almost eliminates the time-consuming human effort typically associated with segmentation of the data. Reed Kopp, first author on the paper, explained, “For each 3D scan, what we used to do in 10 hours we can now do in 1 hour, and without human intervention. For example, for the 30 scans captured as part of a multi-day beamtime mission, it takes 60 working days for a human vs 2 days for a computer to deal with the data”.
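The figures in the quote can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers quoted (30 scans, 10 hours vs 1 hour per scan, 60 working days); the variable names are illustrative, and reading the computer's "2 days" as continuous round-the-clock running is an assumption rather than something the quote states.

```python
# Figures taken directly from the quote.
SCANS = 30
HUMAN_HOURS_PER_SCAN = 10
MACHINE_HOURS_PER_SCAN = 1
HUMAN_WORKING_DAYS_QUOTED = 60

# Total effort for the whole beamtime mission.
human_total_hours = SCANS * HUMAN_HOURS_PER_SCAN      # 300 hours
machine_total_hours = SCANS * MACHINE_HOURS_PER_SCAN  # 30 hours

# Segmentation hours per working day implied by the quoted 60 working days.
implied_hours_per_working_day = human_total_hours / HUMAN_WORKING_DAYS_QUOTED

# Assumption: the computer runs continuously, 24 hours a day.
machine_days_continuous = machine_total_hours / 24

speedup = human_total_hours / machine_total_hours

print(f"human: {human_total_hours} h, machine: {machine_total_hours} h")
print(f"implied human segmentation time per working day: {implied_hours_per_working_day} h")
print(f"machine time if run continuously: {machine_days_continuous} days")
print(f"per-scan speed-up: {speedup}x")
```

Under these readings the quoted 60 working days corresponds to about 5 hours of segmentation per working day, and 30 machine hours fits within the quoted 2 days.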

Following the ESRF-EBS upgrade, the ESRF’s tomography beamlines, BM05, BM18 and ID19, are all capable of collecting huge volumes of tomography data during an experimental session. In their most recent trip to the ESRF in November 2021, the authors nearly doubled the number of scans compared to previous 48-hour missions. For such a vast amount of data, manual segmentation is no longer feasible in a reasonable time frame, and the newly developed CNN systems will be key to its analysis. Brian Wardle, Professor of Aeronautics and Astronautics, commented, “Now, with the Extremely Brilliant Source at the ESRF, more data is produced as experiments are taking place faster and this ties with the motivation of using AI to analyse the data and accelerate our research, and hopefully the research of others.”

Reference

Deep learning unlocks X-ray microtomography segmentation of multiclass microdamage in heterogeneous materials, R. Kopp, J. Joseph, X. Ni, N. Roy, and B.L. Wardle, Advanced Materials (2021): doi: 10.1002/adma.202107817